ByteDance’s Seaweed Redefines Video Generation Efficiency

Hello AI enthusiasts,

This week, we’re diving into a game-changing development from ByteDance, the tech giant behind TikTok. Meet Seaweed, a 7-billion-parameter video generation model that’s making waves by punching way above its weight. In a world where AI models often rely heavily on massive computational resources, Seaweed is proving that efficiency is the new superpower.

What Makes Seaweed Special?

ByteDance’s Seaweed is a lean, mean video-generating machine. Despite its modest 7B-parameter size, significantly smaller than many competitors, it delivers performance that rivals industry heavyweights like Kling 1.6, Google Veo, and Alibaba’s Wan 2.1. How? By optimizing its training process to use far fewer compute resources: the model was reportedly trained with just 665,000 H100 GPU hours. To put that in perspective, many top-tier models demand millions of GPU hours to achieve similar results.
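For a feel of what 665,000 GPU hours means in calendar time, here is a quick back-of-the-envelope sketch. The cluster sizes are illustrative assumptions, not figures from ByteDance, and the math assumes every GPU stays busy the whole time, which real training runs rarely manage.

```python
def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Convert a total GPU-hour budget into wall-clock days.

    Assumes perfect utilization: all GPUs run in parallel with no
    downtime, restarts, or scheduling gaps (an idealization).
    """
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    return gpu_hours / num_gpus / 24


# Reported training budget for Seaweed, in H100 GPU hours.
SEAWEED_GPU_HOURS = 665_000

# Hypothetical cluster sizes, chosen only for illustration.
for num_gpus in (256, 1_000, 4_096):
    days = wall_clock_days(SEAWEED_GPU_HOURS, num_gpus)
    print(f"{num_gpus:>5} GPUs: about {days:.1f} days")
```

On a hypothetical 1,000-GPU cluster, that works out to roughly four weeks of continuous training.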

Seaweed supports a versatile range of tasks:

Text-to-video: Transform written prompts into vivid clips.

Image-to-video: Animate still images into dynamic sequences.

Audio-driven synthesis: Create videos synced with sound inputs.

With the ability to generate clips up to 20 seconds long, Seaweed is poised to empower creators, advertisers, and developers with high-quality outputs at a fraction of the usual cost.

Why It Matters

The AI video generation space is heating up, with models like Kling AI, Google Veo, and Wan 2.1 pushing boundaries in quality and scale. But Seaweed’s arrival signals a shift toward cost-effective innovation. Its efficiency could democratize access to advanced video tools, making them viable for smaller businesses, independent creators, and startups that lack the budgets for compute-heavy solutions.

This also highlights ByteDance’s growing influence in AI. Known for its social media dominance, the company is now flexing its muscles in generative tech, following earlier successes like Goku and OmniHuman-1. Seaweed’s ability to match or outperform larger models suggests ByteDance is mastering the art of doing more with less—a trend that could reshape the AI landscape.

The Bigger Picture

Seaweed’s debut comes at a time when the AI community is grappling with sustainability concerns. Training massive models consumes enormous energy, raising questions about environmental impact. By prioritizing efficiency without sacrificing quality, Seaweed sets a new benchmark for eco-conscious AI development. It’s a reminder that innovation isn’t just about building bigger—it’s about building smarter.

What’s Next?

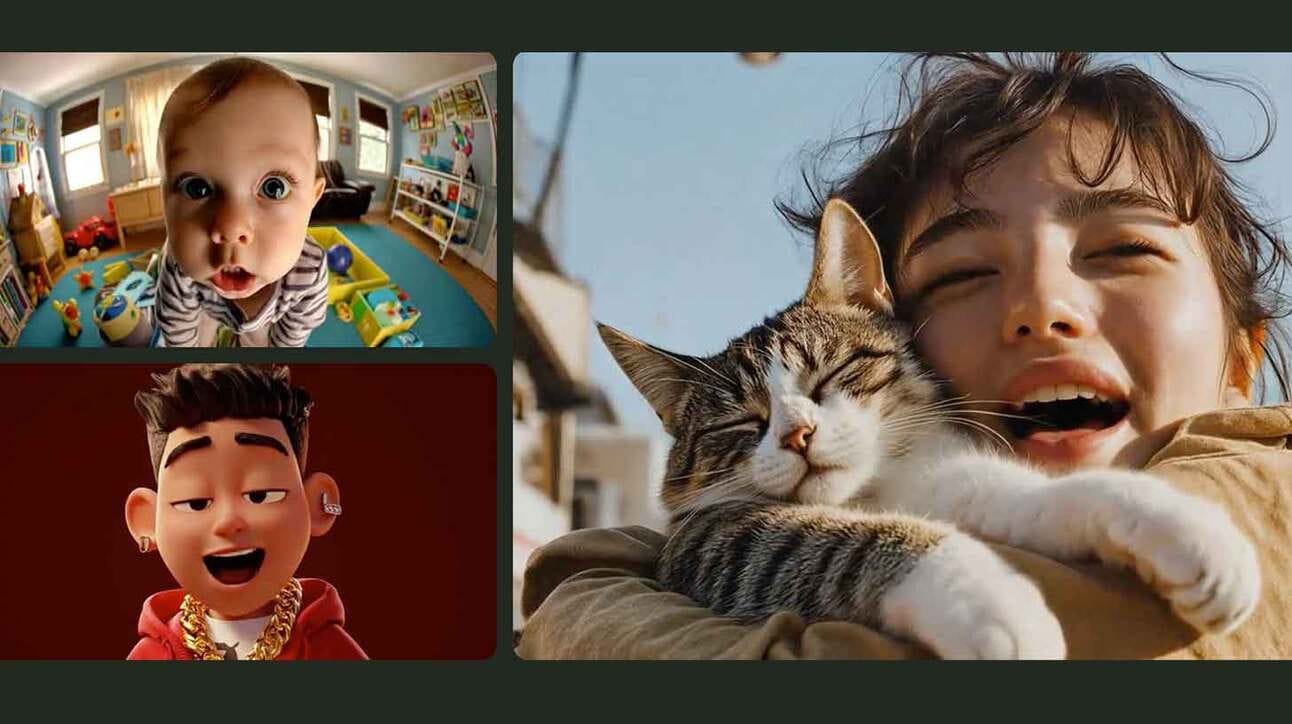

While Seaweed is still fresh, early demos shared by ByteDance showcase its potential: crisp visuals, smooth motion, and impressive prompt adherence. The model’s scalability also hints at future iterations that could push the envelope further. For now, it’s not publicly available, but keep an eye on ByteDance’s moves—this could be the start of a new era in video generation.

Quick AI Bites

Kuaishou’s Kling AI 2.0 claims the title of “world’s most powerful” video model, boasting 22 million users globally.

Alibaba’s Wan 2.1 goes open-source, challenging Sora and Veo with a leading 84.7% VBench score.

Google Veo 2 continues to impress with lifelike outputs via its VideoFX platform, though public access remains limited.

Thought of the Week

“Efficiency in AI isn’t just about saving resources—it’s about unlocking creativity for everyone.”

What do you think about Seaweed’s approach? Could lean models like this redefine the future of AI? Hit reply and share your thoughts!

Until next week, stay curious and keep innovating.